Are clouds having their on-prem moment?

While public cloud usage continues to grow, an increasing number of organizations are also moving to on-prem private clouds (sometimes even owning and operating their own hardware).

For the better part of the last two decades, the move toward public cloud infrastructure seemed like an inevitable, one-way tidal wave. Costs to end users would fall as providers continued to scale, and an array of ever more fine-grained services would allow startups to stay lean and quickly adapt to surges or disruptions in demand.

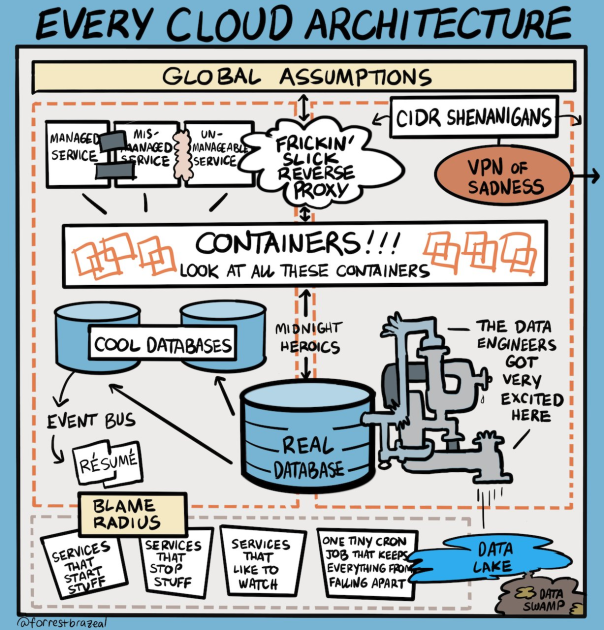

During my three years working on the Stack Overflow podcast and blog, I’ve gotten the chance to talk with lots of interesting folks working with a wealth of microservices and containers. I’ve seen the push to build infrastructure as code and the appeal of going serverless. At the same time, I’ve chatted with lots of folks in the areas of observability and service meshes, who have found a new business supporting the sprawl of interconnections that exists in modern applications.

Via https://mobile.twitter.com/forrestbrazeal/status/1612473738259316736

Recently, however, I’ve noticed a new trend growing in parallel. Yes, adoption of public cloud continues to grow, with many companies still in the process of deciding what to migrate off local servers. Tons of folks gather every day to share knowledge about AWS, Azure, and Google Cloud across our Stack Overflow Collectives.

At the same time, however, a growing number of organizations are also carving out space to repatriate work from public providers to on-prem private clouds and, for the growing world of edge computing and machine learning, going back to the future of actually owning and operating their physical hardware on-site.

# A cloud to call your own

According to a 2022 report by Bessemer Venture Partners, there has been a significant uptick in the adoption of virtual private clouds. The report suggests that “it is becoming easier to package SaaS products and deploy them inside a customer’s virtual private cloud (VPC). This is due in part to the standardization around Kubernetes as the operating system of the cloud. This makes it easier for SaaS companies to serve a wider range of customers that may prefer to keep certain sensitive data or applications in a VPC.”

Many of our customers using Stack Overflow for Teams Enterprise edition opt for this approach. We build and upgrade the platform for knowledge sharing and collaboration, but it’s installed in a private or on-prem location, so the conversations clients have about their proprietary code stay private and on-prem.

Tom Limoncelli, a technical product manager on our site reliability team, has strong opinions on this trend. “Here’s what’s really happening,” he wrote to me:

> a. Running your own datacenter encourages bad practices due to lack of governance and other reasons.
>
> b. The cloud forces/encourages better practices, such as strict governance, infrastructure as code, and fully automated CI/CD pipelines.
>
> c. People are building on-prem clouds, which emulate those best practices because they got a taste of [them] in the cloud.
>
> Another way to say that is… People aren’t returning to the datacenter; they’re returning to on-prem clouds because datacenters can be frustrating.

The way Limoncelli sees it, a fully DIY approach encourages bad practices. Owners tend to treat servers more like pets than cattle, adding their own customization. After decades of that, you get a datacenter that is just one big mess of bad configuration ideas, mismatched technologies, and political barriers that prevent any of that from being fixed. The smart way to move to private cloud is to be strict about standardization. In other words, no, you can’t request a special machine with a weird ethernet connection because one person thinks it’s cool.

Cloud systems, on the other hand, provide an API to request new virtual machines in minutes, instead of a manual purchase process that could take months. Providers install racks and racks of the same hardware configuration. They use standardized machine configs, guiding users away from bespoke setups. Costs are tracked and attributed, which often doesn’t happen in datacenters. On the software side, the move to the cloud is an opportunity to adopt automation like a CI/CD pipeline, weaning people off manual deployments.

Users acquire resources via an API, not by a purchase order. Governance and automation are established from the start. The combination of accessing resources by a standardized API and automatically enforced governance results in a system that is more maintainable and enforces more modern practices.
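To make the governance point concrete, here is a minimal Python sketch of a self-service request API that accepts only machine shapes from an approved catalog and refuses any request without a cost center attached. The catalog, the prices, and the `request_vm`, `VMRequest`, and `PolicyError` names are all hypothetical and do not correspond to any provider's real API.

```python
from dataclasses import dataclass

# Hypothetical catalog of standardized machine shapes; prices are illustrative only.
APPROVED_SHAPES = {
    "small": {"vcpus": 2, "memory_gb": 8, "hourly_cost_cents": 10},
    "medium": {"vcpus": 4, "memory_gb": 16, "hourly_cost_cents": 20},
    "large": {"vcpus": 16, "memory_gb": 64, "hourly_cost_cents": 80},
}

HOURS_PER_MONTH = 730  # rough monthly average used for cost projections


class PolicyError(ValueError):
    """Raised when a request violates the (hypothetical) governance policy."""


@dataclass
class VMRequest:
    shape: str
    cost_center: str  # every machine must be billed to someone


def request_vm(req: VMRequest, ledger: dict[str, int]) -> dict:
    """Validate a request against policy and record its projected monthly cost.

    A real platform would call its provisioning backend here; this sketch
    only returns the record it would provision.
    """
    if req.shape not in APPROVED_SHAPES:
        raise PolicyError(f"shape {req.shape!r} is not in the approved catalog")
    if not req.cost_center:
        raise PolicyError("a cost_center is required for every request")
    spec = APPROVED_SHAPES[req.shape]
    monthly_cents = spec["hourly_cost_cents"] * HOURS_PER_MONTH
    # Costs are attributed at request time rather than discovered later.
    ledger[req.cost_center] = ledger.get(req.cost_center, 0) + monthly_cents
    return {"shape": req.shape, "cost_center": req.cost_center, **spec}
```

A request for the standard `"medium"` shape succeeds and adds its projected cost to the requesting team's ledger entry, while a request for a bespoke shape (the special-machine case above) fails before anything is provisioned. Enforcing the policy in the API itself, rather than in a review meeting, is what keeps the standardization from eroding.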

# Containers will set you free

As Nick Chase argues over at The New Stack, Kubernetes has been a powerful enabler for companies looking to gain more control over their usage of the cloud. It’s relatively agnostic about the real or virtual hardware you choose to employ because it can do a wealth of different things with the Linux kernel as its foundation.

Kubernetes was designed to make it simpler for a small group of people to manage a large constellation of applications by abstracting the underlying hardware. Barring a setup that confines your system to a scarce resource, it offers a level of resilience that, ten years ago, customers turned to public cloud providers for. Along with allowing users to update systems without going offline, it also offers capabilities for monitoring upstream and downstream services, something many microservice-heavy organizations now turn to third-party observability providers for.
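Updating without going offline is typically done with a rolling update. A Kubernetes Deployment's `RollingUpdate` strategy exposes two limits: `maxSurge` (how many pods may exist above the desired count during the update) and `maxUnavailable` (how many pods may be missing). The Python below is a simplified simulation of how those two limits bound a rollout, assuming new pods become ready instantly; it is not the Deployment controller's actual algorithm.

```python
def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> list[tuple[int, int]]:
    """Simulate replacing old pods with new ones under surge/unavailability limits.

    Returns the (old_pods, new_pods) counts after every scale-up and
    scale-down step. Simplification: new pods are assumed ready immediately.
    """
    if max_surge == 0 and max_unavailable == 0:
        # With neither extra pods nor missing pods allowed, no progress is possible.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    history = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: total pods may not exceed replicas + max_surge.
        new = min(replicas, replicas + max_surge - old)
        history.append((old, new))
        # Scale down: keep at least replicas - max_unavailable pods serving.
        old = min(old, max(0, replicas - max_unavailable - new))
        history.append((old, new))
    return history
```

With three replicas, `max_surge=1`, and `max_unavailable=0`, the simulated rollout never has fewer than three pods serving and never more than four running, trading a little temporary extra capacity for zero downtime.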

“Kubernetes’ superpowers change the game in important ways,” writes Chase. “If you can deploy, scale and manage the lifecycle of Kubernetes, you can use it to pave over public and private cloud infrastructures, optimize costs and overheads aggressively, and treat everything underneath Kubernetes as a commodity.”

As my colleague Ryan Donovan pointed out during a recent conversation, “being able to abstract infrastructure has enabled a lot of cloud providers, but it’s also allowed folks to have those containers located anywhere — within a public cloud, on an on-prem server in Lithuania, or a private cloud replicated across multiple locations.” Just because your infrastructure has moved to the cloud doesn’t mean you don’t care about proximity to users and the cost or latency advantages it can provide.

Stack Overflow has taken advantage of some of these superpowers. Max Horstmann, formerly a Staff Software Engineer at Stack Overflow and now a principal software engineer on Azure Kubernetes Service (AKS), wrote in depth about why Kubernetes might be a good choice and how we took advantage of it inside our organization. You can read his article or listen to his episode of the podcast.

“If you’re starting a new project from scratch — a new app, service, or website — your main concern usually isn’t how to operate it at web scale with high availability,” writes Horstmann. “Hence, when it comes to choosing the right set of technologies, Kubernetes — commonly associated with large, distributed systems — might not be on your radar right now. After all, it comes with a significant amount of overhead.”

Despite all this, he sees value in adopting it from the start. “When you’re launching something new, your focus is typically to move fast and iterate quickly based on early feedback. Scaling is something for later. K8S is a tool that, in my view, allows you to do just that because it can accelerate your build/test/deploy loop, allows you to easily deploy and instrument different instances of your app, e.g. for split testing, customer demos etc.”
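One common way to run the split testing Horstmann mentions is to deploy two instances of the app side by side and route each user to one of them by a stable hash of their ID, so the same user always lands on the same variant. The sketch below assumes hypothetical instance names (`app-stable`, `app-variant`) and a hypothetical experiment label; in a real cluster this routing could instead be handled by an ingress, gateway, or service mesh rather than application code.

```python
import hashlib


def assign_variant(user_id: str, variants: list[str], experiment: str = "experiment-1") -> str:
    """Deterministically map a user to one of several deployed app instances.

    Hashing experiment + user ID keeps assignments stable within one
    experiment while reshuffling users between different experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]
```

Because the assignment is a pure function of the user ID and experiment label, no session state is needed to keep a user pinned to the same instance.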

If you’re lucky enough to find product-market fit and start to see a surge in customer demand, Kubernetes proves valuable here as well. “The problems that come with scale — fault tolerance, load balancing, traffic shaping — are already handled,” says Horstmann. “At no point will you hit that moment of being overwhelmed with success; you future-proofed your app without too much extra effort.”

This comment from a HashiCorp forum sums up the advantages well: “A Kubernetes cluster is a good example of an abstraction over compute resources: there are many hosted and self-managed implementations of it on different platforms, all of which offer a common API and common set of capabilities.”

# A bridge between public and private clouds

The Bessemer report cites another emerging technology trend that pairs increased cloud adoption with on-prem data. “Emerging middleware platforms are making it easier to bring the power of the cloud to the data, wherever it may be. This has played out in industries like financial services, where a wave of modern fintech infrastructure helped build bridges between the cloud and legacy banking systems. We are seeing similar bridges being built in other large industries like supply chain, logistics, and healthcare to bring the power of the cloud to these on-premise data sources.”

It’s important to define what we mean by “middleware” here. As Red Hat points out, the term dates back to a 1968 NATO conference on software engineering, where it referred to code that sat between the assembler/compiler at the bottom of the pyramid and the application logic at the top. In the world of hybrid cloud, middleware refers to an evolved version of this same idea. As Asanka Abeysinghe, Chief Tech Evangelist at WSO2, explains in a blog post, this can look like “mega clouds that provide infrastructure as a service (IaaS)-enabled middleware capabilities via APIs, which have become the new DLLs. So, for example, message queues, storage and security policies are open for developers to consume in applications running on the IaaS (Infrastructure-as-a-Service).”

Outside the big public cloud providers, Abeysinghe sees other alternatives catching on. “Kubernetes addresses the issue of cloud lock-in by bringing an open standard to the cloud-native world, and it enables basic middleware capabilities as components. In addition, the Cloud Native Computing Foundation (CNCF) brings a rich set of Kubernetes-centric middleware, and you can find them in the CNCF technology landscape. However, if the middleware capabilities provided by Kubernetes and the CNCF are not enough for your application development, you can add custom resources by defining them in a custom resource definition (CRD) because Kubernetes is built using open standards.”

When I spoke with Abeysinghe for this article, he was quick to point out that there was no data to indicate a trend of companies moving fully away from the cloud, far from it. There are still more folks migrating onto the public cloud than off it. He estimates that 80 percent of activity is still focused on the traditional shift from local to public cloud, with another 20 percent moving in the opposite direction. But that 20 percent is important, precisely because it flows against the prevailing tide we’ve seen over the last decade.

Abeysinghe believes there is a realization, especially at organizations with a lot of legacy hardware infrastructure, that they now have a lot of machinery sitting idle. If you’re a big bank with decades of mainframes at your disposal, utilizing only five percent of that capacity doesn’t make much sense. “Kubernetes lets you run a private cloud that better utilizes your existing on-prem hardware.” Cloud bursting technology lets you shift work to third-party resources when your local hardware is maxing out.
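At its simplest, cloud bursting is a placement rule: fill local capacity first and send only the overflow to a public provider. The Python sketch below uses greedy first-fit placement by CPU cores; the `Pool` class, the job names, and the capacities are hypothetical, and a real scheduler would also weigh data locality, egress costs, and queueing rather than placing jobs strictly in arrival order.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Pool:
    name: str
    capacity: Optional[int]  # CPU cores; None models elastic, pay-as-you-go capacity
    used: int = 0
    jobs: list[str] = field(default_factory=list)

    def fits(self, cores: int) -> bool:
        return self.capacity is None or self.used + cores <= self.capacity

    def place(self, job: str, cores: int) -> None:
        self.used += cores
        self.jobs.append(job)


def schedule_with_bursting(
    jobs: list[tuple[str, int]], on_prem: Pool, cloud: Pool
) -> dict[str, str]:
    """Place each (name, cores) job on-prem if it fits, otherwise burst to the cloud."""
    placement: dict[str, str] = {}
    for name, cores in jobs:
        if on_prem.fits(cores):
            target = on_prem
        elif cloud.fits(cores):
            target = cloud
        else:
            raise RuntimeError(f"no capacity anywhere for {name!r}")
        target.place(name, cores)
        placement[name] = target.name
    return placement
```

With a hypothetical 64-core on-prem pool and jobs of 32, 24, 16, and 8 cores arriving in that order, the first two and the last run locally and only the 16-core job bursts to the cloud. The owned hardware ends up fully used, which addresses the idle-capacity concern, while the public cloud still absorbs the peak.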

Not to be left out of the game, public cloud providers now offer physical server racks to clients who have jobs that run more efficiently on-prem or that need to remain in-house for security and compliance reasons. Providers that once helped companies migrate off local hardware now offer server-racks-as-a-service bundled with their public cloud offerings, a truly full-circle moment for the evolution of compute.

# Bringing AI models in-house

One area where this trend seems particularly strong is among artificial intelligence companies that work with large data sets and build their own models. “Big cloud GPU compute is very expensive, whether it’s for training or for inference,” says Dylan Fox, founder and CEO of AssemblyAI, a startup that provides AI-as-a-service to companies that want natural language capabilities in their offerings but don’t want to build the models or hire a team in-house.

“We do most of our training in on-prem instances. We have a couple hundred A100 NVIDIA cards, and we recently just purchased like a couple hundred more that we have for on-prem instances used to train.” The crypto winter has been a blessing for this market, as a glut of GPUs has come onto the secondary market and prices for new and used hardware have fallen.

As David Linthicum wrote over at InfoWorld:

> Companies are looking at other, more cost-effective options, including managed service providers and co-location providers (colos), or even moving those systems to the old server room down the hall. This last group is returning to “owned platforms” largely for two reasons.
>
> First, the cost of traditional compute and storage equipment has fallen a great deal in the past five years or so. If you’ve never used anything but cloud-based systems, let me explain. We used to go into rooms called datacenters where we could physically touch our computing equipment — equipment that we had to purchase outright before we could use it. I’m only half kidding.
>
> When it comes down to renting versus buying, many are finding that traditional approaches, including the burden of maintaining your own hardware and software, are actually much cheaper than the ever-increasing cloud bills.
>
> Second, many are experiencing some latency with cloud. The slowdowns happen because most enterprises consume cloud-based systems over the open internet, and the multi-tenancy model means that you’re sharing processors and storage systems with many others at the same time. Occasional latency can translate into many thousands of dollars of lost revenue a year, depending on what you’re doing with your specific cloud-based AI/ML system in the cloud.
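The renting-versus-buying comparison can be framed as a simple break-even calculation: owning wins once the upfront purchase has been paid back by the monthly difference between the cloud bill and the cost of running your own gear. The Python below is a back-of-the-envelope sketch; the function name and the example figures are made up for illustration, and it ignores depreciation, staffing, power, financing, and hardware refresh cycles.

```python
from typing import Optional


def break_even_month(purchase_cost: int, monthly_on_prem: int, monthly_cloud: int) -> Optional[int]:
    """Return the first month in which owning is no more expensive than renting.

    Owning costs purchase_cost up front plus monthly_on_prem per month;
    renting costs monthly_cloud per month. Returns None if owning never
    catches up because running the hardware costs at least as much as the cloud.
    """
    monthly_saving = monthly_cloud - monthly_on_prem
    if monthly_saving <= 0:
        return None
    # purchase + m * on_prem <= m * cloud  <=>  m >= purchase / saving
    return -(-purchase_cost // monthly_saving)  # integer ceiling division
```

For example, with hypothetical figures of $300,000 for hardware, $10,000 a month to run it, and a $35,000 monthly cloud bill, `break_even_month(300_000, 10_000, 35_000)` returns `12`; the same hardware compared against a $9,000 monthly cloud bill never pays off, and the function returns `None`.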

It’s not just small AI startups that want to crunch a lot of data at low latency with homegrown models. Here’s an eye-opening quote from Protocol: “The on-prem trend is growing among big box and grocery retailers that need to feed product, distribution, and store-specific data into large machine learning models for inventory predictions, said Vijay Raghavendra, chief technology officer at SymphonyAI, which works with grocery chain Albertsons.”

Raghavendra left Walmart in 2020 after seven years with the company in senior engineering and merchant technology roles. “This happened after my time at Walmart. They went from having everything on-prem, to everything in the cloud when I was there. And now I think there’s more of an equilibrium where they are now investing again in their hybrid infrastructure — on-prem infrastructure combined with the cloud,” Raghavendra told Protocol. “If you have the capability, it may make sense to stand up your own [co-location data center] and run those workloads in your own colo, because the costs of running it in the cloud does get quite expensive at certain scale.”

Chick-fil-A had a similar experience. In a blog post, Brian Chambers, the company’s head of Enterprise Architecture, noted that “In researching tools and components for the platform, we quickly discovered existing offerings were targeted towards cloud or data center deployments. Components were not designed to operate in resource constrained environments, without dependable internet connections, or to scale to thousands of active Kubernetes clusters. Even commercial tools that worked at scale did not have licensing models that worked beyond a few hundred clusters. As a result, we decided to build and host many of the components ourselves.”

Their solution allowed a DevOps team and Smart Device Support to build, deploy, and push updates to thousands of restaurants.

# Cloud, with control

Total spending on cloud computing is already enormous and is still projected to grow more than 20% this year, closing in on half a trillion dollars. But this will be a far more varied and nuanced period of growth. “Cloud is the powerhouse that drives today’s digital organizations,” said Sid Nag, research vice president at Gartner. “CIOs are beyond the era of irrational exuberance of procuring cloud services and are being thoughtful in their choice of public cloud providers to drive specific, desired business and technology outcomes in their digital transformation journey.”

After a decade or more spent moving away from server racks, companies are finding there can be advantages to running local infrastructure for certain kinds of compute. There is also, perhaps, a generational shift at work. The engineers who cut their teeth building big public clouds inside large tech companies are now moving on to create startups or take senior roles at smaller companies that specialize in a subset of cloud offerings. What’s old is new again, but with a vast variety of new flavors and permutations to choose from.